#statistical forecasting techniques

Demand Planning - Forecasting Techniques:

The basic ingredient of any demand plan is a statistical forecast. Statistical models and resulting forecasts are the building blocks of the planning process.

Although consensus and collaboration are key ingredients of a successful demand management program, statistical forecasting is the first step in creating the baseline plan. To this end, good processes and software technologies become important. When you prepare a Request for Proposal (RFP), one of the key things to check is that it covers all of the modeling algorithms and techniques relevant to your process. These depend on your industry and your specific business model.

Forecasting techniques can be broadly classified as:

Time Series Forecasting models, including exponential smoothing, Holt-Winters multiplicative smoothing, ARIMA (Box-Jenkins) models, and logarithmic regression models

Promotional Planning Models that typically use event modeling methodologies and indicator variable models

Causal models that include a variety of Multiple Linear Regression Models and transfer function models

Probabilistic Models that often forecast the probability of a particular event happening in the future; these include Logit, Probit, and Tobit models

Croston's Models to forecast intermittent demand. Here is a link to a semi-technical explanation of Croston's Method.
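Croston's method shows how these models build on one another. It applies simple exponential smoothing separately to the sizes of the non-zero demands and to the intervals between them, then divides one by the other. The sketch below assumes the classic formulation: a single shared smoothing constant `alpha`, initialization from the first non-zero demand, and no bias correction such as the Syntetos-Boylan adjustment. The function names are illustrative and are not taken from any forecasting package.

```python
from typing import Sequence


def ses_forecast(series: Sequence[float], alpha: float) -> float:
    """Simple exponential smoothing: return the next-period forecast (the final level)."""
    if not series:
        raise ValueError("series must not be empty")
    level = float(series[0])  # initialise the level with the first observation
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def croston_forecast(demand: Sequence[float], alpha: float = 0.1) -> float:
    """Classic Croston forecast of the per-period demand rate for an intermittent series."""
    nonzero = [i for i, d in enumerate(demand) if d > 0]
    if not nonzero:
        return 0.0  # no demand observed yet
    first = nonzero[0]
    size = float(demand[first])   # smoothed size of non-zero demands (z)
    interval = float(first + 1)   # smoothed number of periods between demands (p)
    periods_since = 1             # periods elapsed since the last non-zero demand (q)
    for d in demand[first + 1:]:
        if d > 0:
            # SES updates are applied only in periods where demand occurs
            size += alpha * (d - size)
            interval += alpha * (periods_since - interval)
            periods_since = 1
        else:
            periods_since += 1
    return size / interval


# Monthly demand for a slow-moving spare part (illustrative numbers)
history = [0, 0, 5, 0, 0, 0, 3, 0, 4]
print(round(croston_forecast(history, alpha=0.5), 3))
```

The result is a demand *rate* per period, not a prediction of when the next order arrives. That is why Croston forecasts for lumpy items look flat.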

Data Analysis: Turning Information into Insight

In today’s digital age, data has become a vital asset for businesses, researchers, governments, and individuals alike. However, raw data on its own holds little value until it is interpreted and understood. This is where data analysis comes into play. Data analysis is the systematic process of inspecting, cleaning, transforming, and modeling data with the objective of discovering useful information, drawing conclusions, and supporting decision-making.

What is Data Analysis?

At its core, data analysis involves extracting meaningful insights from datasets. These datasets can range from small, structured spreadsheets to large, unstructured data lakes. The primary aim is to make sense of data in order to answer questions, solve problems, or identify trends and patterns that are not immediately apparent.

Data analysis is used in virtually every industry, from healthcare and finance to marketing and education. It enables organizations to make evidence-based decisions, improve operational efficiency, and gain competitive advantages.

Types of Data Analysis

There are several types of data analysis, each serving a distinct purpose:

1. Descriptive Analysis

Descriptive analysis answers the question: “What happened?” It summarizes raw data into digestible formats such as averages, percentages, or counts. For instance, a store might analyze last month’s sales to determine which products performed best.
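Here is a minimal sketch of descriptive analysis on the store example: it turns a handful of sales records into totals, percentage shares, counts, and averages. The product names and figures are invented. The code sticks to the standard library so it stays self-contained. In practice, a Pandas `groupby` would do the same job.

```python
from collections import defaultdict

# Hypothetical line items from last month's sales: (product, units sold)
sales = [
    ("kettle", 12), ("toaster", 7), ("kettle", 5),
    ("blender", 9), ("toaster", 3), ("kettle", 4),
]


def describe_sales(records):
    """Units, share of all units (%), order count and average order size per product."""
    totals = defaultdict(int)
    orders = defaultdict(int)
    for product, units in records:
        totals[product] += units
        orders[product] += 1
    grand_total = sum(totals.values())
    return {
        product: {
            "units": totals[product],
            "share_pct": 100 * totals[product] / grand_total,
            "orders": orders[product],
            "avg_units_per_order": totals[product] / orders[product],
        }
        for product in totals
    }


summary = describe_sales(sales)
best_seller = max(summary, key=lambda p: summary[p]["units"])
for product, stats in sorted(summary.items(), key=lambda kv: -kv[1]["units"]):
    print(f"{product:8} {stats['units']:3d} units  {stats['share_pct']:5.1f}%  "
          f"{stats['orders']} orders  avg {stats['avg_units_per_order']:.1f}")
print("Best seller:", best_seller)
```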

2. Diagnostic Analysis

This type of analysis explores the reasons behind past outcomes. It answers: “Why did it happen?” For example, if a company sees a sudden drop in website traffic, diagnostic analysis can help pinpoint whether it was caused by a technical problem, changes in search engine (SEO) ranking, or competitor actions.
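A common first diagnostic step is to break a change down by segment and see where it is concentrated. The sketch below does this for the website-traffic example using invented weekly visit counts per traffic source. A drop concentrated in organic search would point toward a search-ranking or technical crawl issue rather than, say, a referral partner. Confirming the actual cause still needs further investigation.

```python
# Hypothetical weekly website visits by traffic source, before and after a sudden drop
before = {"organic_search": 5200, "direct": 2100, "referral": 900, "social": 800}
after = {"organic_search": 3100, "direct": 2050, "referral": 950, "social": 780}


def change_by_source(before, after):
    """Return (source, change in visits) pairs, largest decline first."""
    sources = set(before) | set(after)
    deltas = {s: after.get(s, 0) - before.get(s, 0) for s in sources}
    return sorted(deltas.items(), key=lambda item: item[1])


changes = change_by_source(before, after)
total_change = sum(after.values()) - sum(before.values())
for source, delta in changes:
    share = delta / total_change if total_change else 0.0
    print(f"{source:15} {delta:+6d} visits ({share:.0%} of the total change)")
```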

3. Predictive Analysis

Predictive analysis uses historical data to forecast future outcomes. It answers: “What is likely to happen?” It relies on statistical models and machine learning algorithms to identify patterns and predict future trends, such as customer churn or product demand.
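About the simplest statistical predictive model is a straight-line trend fitted by ordinary least squares and extrapolated forward. The sketch below implements that with the standard library. The demand figures are hypothetical. A real demand forecast would also need to handle seasonality and be validated on held-out periods.

```python
from statistics import mean


def fit_linear_trend(values):
    """Ordinary least squares fit of value = intercept + slope * t for t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t = list(range(n))
    t_bar, y_bar = mean(t), mean(values)
    sxy = sum((ti - t_bar) * (yi - y_bar) for ti, yi in zip(t, values))
    sxx = sum((ti - t_bar) ** 2 for ti in t)
    slope = sxy / sxx
    intercept = y_bar - slope * t_bar
    return intercept, slope


def forecast_trend(values, steps_ahead):
    """Extrapolate the fitted trend line steps_ahead periods past the last observation."""
    intercept, slope = fit_linear_trend(values)
    last_t = len(values) - 1
    return [intercept + slope * (last_t + h) for h in range(1, steps_ahead + 1)]


# Hypothetical monthly product demand
demand = [101, 103, 109, 111, 117, 119]
print([round(x, 1) for x in forecast_trend(demand, steps_ahead=3)])
```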

4. Prescriptive Analysis

Prescriptive analysis provides recommendations based on data. It answers: “What should we do?” This is the most advanced type of analysis and often combines insights from predictive analysis with optimization and simulation techniques to guide decision-making.
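At its smallest, a prescriptive step takes predictions, applies an objective, and recommends the action that scores best. In the sketch below, the demand predicted at each candidate price and the unit cost are assumed values. An exhaustive search over a few candidates stands in for the linear programming or simulation a real pricing problem would use.

```python
# Hypothetical demand predicted by a model at each candidate price
predicted_demand = {9.99: 1200, 11.99: 1000, 13.99: 780, 15.99: 560}
UNIT_COST = 6.00  # assumed cost per unit


def recommend_price(demand_by_price, unit_cost):
    """Return the candidate price with the highest expected profit, plus all profits."""
    profits = {price: (price - unit_cost) * qty for price, qty in demand_by_price.items()}
    best = max(profits, key=profits.get)
    return best, profits


best_price, profits = recommend_price(predicted_demand, UNIT_COST)
for price, profit in sorted(profits.items()):
    print(f"price {price:6.2f} -> expected profit {profit:9.2f}")
print("Recommended price:", best_price)
```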

The Data Analysis Process

The data analysis process commonly follows these steps:

1. Define the Objective

Before diving into the data, it’s essential to clearly understand the question or problem at hand. A well-defined objective guides the entire analysis and ensures that efforts are aligned with the desired outcome.

2. Collect Data

Data can come from numerous sources, including databases, surveys, sensors, APIs, or social media. It’s important to make sure that the data is relevant, timely, and of sufficient quality.

3. Clean and Prepare Data

Raw data is often messy: it may contain missing values, duplicates, inconsistencies, or errors. Data cleaning involves addressing these problems. Preparation may include formatting, normalization, or creating new variables.
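The cleaning and preparation steps above can be sketched in a few lines. The records are hypothetical, and median imputation plus min-max scaling are just one reasonable choice among several. The sketch drops duplicate rows, standardizes inconsistent labels, fills a missing value, and derives a normalized variable.

```python
from statistics import median

# Hypothetical raw records: (customer_id, region, monthly_spend); None marks a missing value
raw = [
    (1, "North ", 120.0),
    (2, "south", None),
    (1, "North ", 120.0),  # exact duplicate of the first row
    (3, "SOUTH", 80.0),
    (4, "north", 200.0),
]


def clean(records):
    """Deduplicate, standardise labels, impute missing spend, and add a scaled column."""
    # 1. Drop exact duplicate rows while preserving the original order
    seen, rows = set(), []
    for row in records:
        if row not in seen:
            seen.add(row)
            rows.append(row)
    # 2. Fix inconsistent region labels (stray whitespace, mixed case)
    rows = [(cid, region.strip().title(), spend) for cid, region, spend in rows]
    # 3. Impute missing spend with the median of the observed values
    fill = median(spend for _, _, spend in rows if spend is not None)
    rows = [(cid, region, fill if spend is None else spend) for cid, region, spend in rows]
    # 4. Create a new variable: spend min-max normalised to the range [0, 1]
    lo = min(spend for _, _, spend in rows)
    hi = max(spend for _, _, spend in rows)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all values are equal
    return [
        {"customer_id": cid, "region": region, "spend": spend,
         "spend_scaled": (spend - lo) / span}
        for cid, region, spend in rows
    ]


for record in clean(raw):
    print(record)
```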

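4. Analyze the Data

This step applies statistical, computational, or visual techniques to the prepared data to answer the question defined at the outset. Tools like Excel, SQL, Python, R, or specialized software such as Tableau, Power BI, and SAS are typically used.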

5. Interpret Results

Analysis isn't just about numbers; it’s about meaning. Interpreting results involves drawing conclusions, explaining findings, and linking insights back to the original objective.

6. Communicate Findings

Insights must be communicated effectively to stakeholders. Visualization tools such as charts, graphs, dashboards, and reports play a vital role in telling the story behind the data.

7. Make Decisions and Take Action

The ultimate aim of data analysis is to inform decisions. Whether it’s optimizing a marketing campaign, improving customer service, or refining a product, actionable insights turn data into real-world results.

Tools and Technologies for Data Analysis

A wide range of tools is available for data analysis, each suited to different tasks and skill levels:

Excel: Great for small datasets and quick analysis. Offers functions, pivot tables, and charts.

Python: Powerful for complex data manipulation and modeling. Popular libraries include Pandas, NumPy, Matplotlib, and Scikit-learn.

R: A programming language widely used for statistical analysis and data visualization.

SQL: Essential for querying and managing data stored in relational databases.

Tableau & Power BI: User-friendly business intelligence tools that turn data into interactive visualizations and dashboards.
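To make the SQL entry concrete, here is a small aggregate query run through Python's built-in `sqlite3` module against an in-memory database. The `orders` table and its rows are invented for illustration. The same `GROUP BY` query should run largely unchanged on most relational databases.

```python
import sqlite3

# In-memory database with a hypothetical orders table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 200.0), ("South", 45.5)],
)

# Aggregate revenue and order counts per region, highest revenue first
rows = conn.execute(
    """
    SELECT region, COUNT(*) AS n_orders, SUM(amount) AS revenue
    FROM orders
    GROUP BY region
    ORDER BY revenue DESC
    """
).fetchall()

for region, n_orders, revenue in rows:
    print(f"{region}: {n_orders} orders, revenue {revenue:.2f}")
conn.close()
```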

Applications of Data Analysis

Healthcare: Analyzing patient data to improve treatment plans, predict outbreaks, and manage resources.

Finance: Detecting fraud, managing risk, and guiding investment strategies.

Retail: Personalizing marketing campaigns, managing inventory, and optimizing pricing.

Sports: Enhancing performance through player data and game analysis.

Public Policy: Informing decisions on education, transportation, and economic development.

Challenges in Data Analysis

Data Quality: Incomplete, outdated, or incorrect data can lead to misleading conclusions.

Data Privacy: Handling sensitive data requires strict adherence to privacy regulations such as GDPR.

Skill Gaps: There is a growing demand for skilled data analysts who can interpret complex datasets.

Integration: Combining data from disparate sources can be technically difficult.

Bias and Misinterpretation: Poorly designed analysis can introduce bias or lead to wrong assumptions.

The Future of Data Analysis

As data continues to grow exponentially, the field of data analysis is evolving rapidly. Emerging trends include:

Artificial Intelligence (AI) & Machine Learning: Automating analysis and generating predictive models at scale.

Real-Time Analytics: Enabling decisions based on live data streams for faster response.

Data Democratization: Making data accessible and understandable to everyone in an organization.

Short-Term vs. Long-Term Data Analytics Course in Delhi: Which One to Choose?

In today’s digital world, data is everywhere. From small businesses to large organizations, everyone uses data to make better decisions. Data analytics helps in understanding and using this data effectively. If you are interested in learning data analytics, you might wonder whether to choose a short-term or a long-term course. Both options have their benefits, and your choice depends on your goals, time, and career plans.

At Uncodemy, we offer both short-term and long-term data analytics courses in Delhi. This article will help you understand the key differences between these courses and guide you to make the right choice.

What is Data Analytics?

Data analytics is the process of examining large sets of data to find patterns, insights, and trends. It involves collecting, cleaning, analyzing, and interpreting data. Companies use data analytics to improve their services, understand customer behavior, and increase efficiency.

There are four main types of data analytics:

Descriptive Analytics: Understanding what has happened in the past.

Diagnostic Analytics: Identifying why something happened.

Predictive Analytics: Forecasting future outcomes.

Prescriptive Analytics: Suggesting actions to achieve desired outcomes.

Short-Term Data Analytics Course

A short-term data analytics course is a fast-paced program designed to teach you essential skills quickly. These courses usually last from a few weeks to a few months.

Benefits of a Short-Term Data Analytics Course

Quick Learning: You can learn the basics of data analytics in a short time.

Cost-Effective: Short-term courses are usually more affordable.

Skill Upgrade: Ideal for professionals looking to add new skills without a long commitment.

Job-Ready: Get practical knowledge and start working in less time.

Who Should Choose a Short-Term Course?

Working Professionals: If you want to upskill without leaving your job.

Students: If you want to add data analytics to your resume quickly.

Career Switchers: If you want to explore data analytics before committing to a long-term course.

What You Will Learn in a Short-Term Course

Introduction to Data Analytics

Basic Tools (Excel, SQL, Python)

Data Visualization (Tableau, Power BI)

Basic Statistics and Data Interpretation

Hands-on Projects

Long-Term Data Analytics Course

A long-term data analytics course is a comprehensive program that provides in-depth knowledge. These courses usually last from six months to two years.

Benefits of a Long-Term Data Analytics Course

Deep Knowledge: Covers advanced topics and techniques in detail.

Better Job Opportunities: Preferred by employers for specialized roles.

Practical Experience: Includes internships and real-world projects.

Certifications: You may earn industry-recognized certifications.

Who Should Choose a Long-Term Course?

Beginners: If you want to start a career in data analytics from scratch.

Career Changers: If you want to switch to a data analytics career.

Serious Learners: If you want advanced knowledge and long-term career growth.

What You Will Learn in a Long-Term Course

Advanced Data Analytics Techniques

Machine Learning and AI

Big Data Tools (Hadoop, Spark)

Data Ethics and Governance

Capstone Projects and Internships

Key Differences Between Short-Term and Long-Term Courses

Duration: Weeks to a few months (short-term) vs. six months to two years (long-term).

Depth of Knowledge: Basic and intermediate concepts (short-term) vs. advanced and specialized concepts (long-term).

Cost: More affordable (short-term) vs. higher investment (long-term).

Learning Style: Fast-paced (short-term) vs. detailed and comprehensive (long-term).

Career Impact: Quick entry-level jobs (short-term) vs. better career growth and high-level jobs (long-term).

Certification: Basic certificate (short-term) vs. industry-recognized certifications (long-term).

Practical Projects: Limited (short-term) vs. extensive, real-world projects (long-term).

How to Choose the Right Course for You

When deciding between a short-term and long-term data analytics course at Uncodemy, consider these factors:

Your Career Goals

If you want a quick job or basic knowledge, choose a short-term course.

If you want a long-term career in data analytics, choose a long-term course.

Time Commitment

Choose a short-term course if you have limited time.

Choose a long-term course if you can dedicate several months to learning.

Budget

Short-term courses are usually more affordable.

Long-term courses require a bigger investment but offer better returns.

Current Knowledge

If you already know some basics, a short-term course will enhance your skills.

If you are a beginner, a long-term course will provide a solid foundation.

Job Market

Short-term courses can help you get entry-level jobs quickly.

Long-term courses open doors to advanced and specialized roles.

Why Choose Uncodemy for Data Analytics Courses in Delhi?

At Uncodemy, we provide top-quality training in data analytics. Our courses are designed by industry experts to meet the latest market demands. Here’s why you should choose us:

Experienced Trainers: Learn from professionals with real-world experience.

Practical Learning: Hands-on projects and case studies.

Flexible Schedule: Choose classes that fit your timing.

Placement Assistance: We help you find the right job after course completion.

Certification: Receive a recognized certificate to boost your career.

Final Thoughts

Choosing between a short-term and long-term data analytics course depends on your goals, time, and budget. If you want quick skills and job readiness, a short-term course is ideal. If you seek in-depth knowledge and long-term career growth, a long-term course is the better choice.

At Uncodemy, we offer both options to meet your needs. Start your journey in data analytics today and open the door to exciting career opportunities. Visit our website or contact us to learn more about our Data Analytics course in Delhi.

Your future in data analytics starts here with Uncodemy!

Business Analytics vs. Data Science: Understanding the Key Differences

In today's data-driven world, terms like "business analytics" and "data science" are often used interchangeably. However, while they share a common goal of extracting insights from data, they are distinct fields with different focuses and methodologies. Let's break down the key differences to help you understand which path might be right for you.

Business Analytics: Focusing on the Present and Past

Business analytics primarily focuses on analyzing historical data to understand past performance and inform current business decisions. It aims to answer questions like:

What happened?

Why did it happen?

What is happening now?

Key characteristics of business analytics:

Descriptive and Diagnostic: It uses techniques like reporting, dashboards, and data visualization to summarize and explain past trends.

Structured Data: It often works with structured data from databases and spreadsheets.

Business Domain Expertise: A strong understanding of the specific business domain is crucial.

Tools: Business analysts typically use tools like Excel, SQL, Tableau, and Power BI.

Focus: Optimizing current business operations and improving efficiency.

Data Science: Predicting the Future and Building Models

Data science, on the other hand, focuses on building predictive models and developing algorithms to forecast future outcomes. It aims to answer questions like:

What will happen?

How can we make it happen?

Key characteristics of data science:

Predictive and Prescriptive: It uses machine learning, statistical modeling, and AI to predict future trends and prescribe optimal actions.

Unstructured and Structured Data: It can handle both structured and unstructured data from various sources.

Technical Proficiency: Strong programming skills (Python, R) and a deep understanding of machine learning algorithms are essential.

Tools: Data scientists use programming languages, machine learning libraries, and big data technologies.

Focus: Developing innovative solutions, building AI-powered products, and driving long-term strategic initiatives.

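Key Differences Summarized:

Focus: Business analytics examines past and present performance; data science builds models to predict future outcomes.

Techniques: Business analytics is mainly descriptive and diagnostic (reporting, dashboards, visualization); data science is predictive and prescriptive (machine learning, statistical modeling, AI).

Data: Business analytics mostly works with structured data; data science handles both structured and unstructured data.

Skills: Business analytics emphasizes business domain expertise; data science emphasizes programming (Python, R) and machine learning.

Tools: Excel, SQL, Tableau, and Power BI for business analytics; programming languages, machine learning libraries, and big data technologies for data science.

Goal: Optimizing current operations vs. developing innovative solutions and driving long-term strategic initiatives.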

Which Path is Right for You?

Choose Business Analytics if:

You are interested in analyzing past data to improve current business operations.

You have a strong understanding of a specific business domain.

You prefer working with structured data and using visualization tools.

Choose Data Science if:

You are passionate about building predictive models and developing AI-powered solutions.

You have a strong interest in programming and machine learning.

You enjoy working with both structured and unstructured data.

Xaltius Academy's Data Science & AI Course:

If you're leaning towards data science and want to delve into machine learning and AI, Xaltius Academy's Data Science & AI course is an excellent choice. This program equips you with the necessary skills and knowledge to become a proficient data scientist, covering essential topics like:

Python programming

Machine learning algorithms

Data visualization

And much more!

By understanding the distinct roles of business analytics and data science, you can make an informed decision about your career path and leverage the power of data to drive success.

Why Should We Consider Using Predictive Analysis in Travel?

Predictive analysis in travel combines past and present-day data with artificial intelligence and statistical models to forecast customers' expectations and market conditions. It is a transformative approach that helps travel businesses operate efficiently and offer customers solutions tailored to their needs.

How Does Predictive Analysis Work in the Travel Industry?

Predictive analysis in the travel industry uses complex patterns and statistical information from the past to estimate consumers' future actions, behaviors, and trends. The benefits include more efficient use of resources, an improved customer experience, and higher revenue.

How Is Predictive Analytics Used in the Travel Industry?

Predictive analytics combines analytical models and artificial intelligence with statistical methods to analyze past and present data in the travel industry. This enables travel companies to forecast customer requirements and market developments and to improve their organizational effectiveness.

The Significance and Impact of Data-Driven Decision-Making in Travel

As a business intelligence tool, predictive analytics guides travel organizations toward the right strategies by examining past customer data, market conditions, and external factors such as climate or the economy. This helps businesses stay flexible in highly competitive environments.

Personalization Using Forecasting

Personalization is one of the main uses of predictive analytics. Understanding customers’ needs helps travel businesses decide on strategies such as marketing messages, promoted destination suggestions, and variable pricing.

Improving Company Performance

Operational efficiency is another advantage. Airlines forecast their maintenance requirements to minimize unnecessary aircraft downtime, and hotels schedule staff to match expected room occupancy, leading to better service delivery and cost efficiency.

What Are Examples of Predictive Analytics in Travel?

Several cases show how predictive analysis applies to a range of business issues in travel, from pricing strategy to customer retention. Here are some examples from across the industry.

Dynamic Pricing Strategies

Prices for products and services change continually to match demand, influenced by factors such as the time of year, customer preferences, and market trends. This is common in airline ticketing and hotel reservations.

Predicting Travel Demand

Predictive analytics relies on historical information as well as inputs received in real time to predict the demand for individual places or services. It enables travel companies to plan inventory and marketing ahead of time.
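Airline and hotel revenue management often blends historical information with real-time inputs through a "pickup" forecast. The additive variant below is a minimal sketch. Its final-bookings estimate is the bookings already on hand (the real-time input) plus the average number of bookings that comparable past departures picked up from the same point in the booking window (the history). The numbers are hypothetical, and this is not necessarily the method any particular company uses.

```python
from statistics import mean


def additive_pickup_forecast(bookings_on_hand, historical_pickups):
    """Forecast final bookings as bookings already on hand plus the average pickup.

    historical_pickups: for comparable past departures, the number of bookings that
    arrived between this point in the booking window and departure day.
    """
    if not historical_pickups:
        raise ValueError("need at least one comparable past departure")
    return bookings_on_hand + mean(historical_pickups)


# Hypothetical flight: 140 seats sold 14 days before departure (real-time input);
# comparable past flights picked up 30, 36 and 33 more bookings from that point (history)
forecast = additive_pickup_forecast(140, [30, 36, 33])
print(f"Forecast final bookings: {forecast:.0f}")
```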

Customer Retention Analysis

Travel organizations apply big data techniques to identify customers who are likely to churn, and then try to retain them with special loyalty programs or personalized offers.

Managing Operational Risks

Aviation managers and transportation companies use forecasting techniques to anticipate possible disruptions, such as severe weather or equipment breakdowns, and keep operations running smoothly.

Marketing Campaigns

Predictive models help marketers get the most value from their budget by targeting audiences that are likely to respond to a given campaign.

What Is AI for Predictive Analytics in Travel?

AI for predictive analytics in travel analyzes large volumes of data to extract patterns and insights that are useful for predicting travel trends. This allows businesses to improve how they deliver services and run operations, and to forecast the market more accurately than conventional approaches.

What Are the Use Cases of Predictive Analysis in Travel?

Applications of predictive analytics across the travel industry range from operational optimization to customer engagement. By examining the data, travel companies can identify challenges and opportunities and then respond to them.

Airline Flight Plan / Flight Path Optimization

Predictive analytics helps airlines choose the best routes and schedules to reduce costs and satisfy their customers.

Customer Loyalty Programs

Travel companies use predictive models to make their loyalty programs more efficient and more appealing to frequent travelers.

Destination Marketing

Marketing departments within tourism boards and travel companies look for trends in the data to find the places tourists are most likely to visit and spend money on, and then market accordingly, so they avoid wasting budget on destinations that few people want to visit.

Conclusion: How Predictive Analysis Shapes the Travel Industry

Using advanced data analysis to drive better decision-making, improve customer satisfaction, and strengthen operational performance has reshaped the travel industry. It enables businesses to forecast market needs, allocate resources appropriately, and design and deliver distinctive products, which makes it highly relevant in the current market environment.

As the industry moves forward, predictive analytics will become even more important for addressing issues such as demand volatility, organizational inefficiencies, and customer loyalty. Drawing on AI and machine learning, travel firms can forecast developments, mitigate possible adverse effects, and tap into new sources of revenue.

Can statistics and data science methods make predicting a football game easier?

Hi,

Statistics and data science methods can significantly enhance the ability to predict the outcomes of football games, though they cannot guarantee results due to the inherent unpredictability of sports. Here’s how these methods contribute to improving predictions:

Data Collection and Analysis:

Collecting and analyzing historical data on football games provides a basis for understanding patterns and trends. This data can include player statistics, team performance metrics, match outcomes, and more. Analyzing this data helps identify factors that influence game results and informs predictive models.

Feature Engineering:

Feature engineering involves creating and selecting relevant features (variables) that contribute to the prediction of game outcomes. For football, features might include team statistics (e.g., goals scored, possession percentage), player metrics (e.g., player fitness, goals scored), and contextual factors (e.g., home/away games, weather conditions). Effective feature engineering enhances the model’s ability to capture important aspects of the game.
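As a minimal sketch of this kind of feature engineering, the snippet below turns a team's recent results into pre-match features: average goals scored and conceded over a rolling window, points per game as a "form" measure, and a home/away indicator. The match history and the window size are hypothetical choices.

```python
from statistics import mean

# Hypothetical recent results for one team, oldest first:
# (goals_scored, goals_conceded, played_at_home)
history = [(2, 1, True), (0, 0, False), (1, 3, False), (3, 0, True), (1, 1, True)]


def points(scored, conceded):
    """League points for a single match: 3 for a win, 1 for a draw, 0 for a loss."""
    if scored > conceded:
        return 3
    return 1 if scored == conceded else 0


def form_features(matches, window=5, next_match_at_home=True):
    """Build simple pre-match features from the last `window` matches."""
    recent = matches[-window:]
    if not recent:
        raise ValueError("need at least one past match")
    return {
        "avg_goals_scored": mean(m[0] for m in recent),
        "avg_goals_conceded": mean(m[1] for m in recent),
        "points_per_game": mean(points(m[0], m[1]) for m in recent),
        "is_home": int(next_match_at_home),  # contextual indicator variable
    }


print(form_features(history, window=3))
```

Each feature uses only matches played *before* the one being predicted. This avoids leaking the outcome into the inputs.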

Predictive Modeling:

Various predictive models can be used to forecast football game outcomes. Common models include:

Logistic Regression: This model estimates the probability of a binary outcome (e.g., win or lose) based on input features.

Random Forest: An ensemble method that builds multiple decision trees and aggregates their predictions. It can handle complex interactions between features and improve accuracy.

Support Vector Machines (SVM): A classification model that finds the optimal hyperplane to separate different classes (e.g., win or lose).

Poisson Regression: Specifically used for predicting the number of goals scored by teams, based on historical goal data.
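A common way to turn Poisson goal predictions into match-outcome probabilities is to treat each side's goals as independent Poisson counts and sum over scorelines. The sketch below assumes the expected-goals rates are already known; in practice they would come from a fitted Poisson regression. It also truncates scores at 10 goals. Independence is a simplification; refinements such as the Dixon-Coles model adjust for it at low scores.

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """Probability of exactly k events when the expected count is lam."""
    return lam ** k * exp(-lam) / factorial(k)


def match_outcome_probs(home_rate, away_rate, max_goals=10):
    """Home win / draw / away win probabilities under independent Poisson goal counts.

    Scores above max_goals are ignored, so the three values sum to slightly less than 1.
    """
    home_win = draw = away_win = 0.0
    for h in range(max_goals + 1):
        for a in range(max_goals + 1):
            p = poisson_pmf(h, home_rate) * poisson_pmf(a, away_rate)
            if h > a:
                home_win += p
            elif h == a:
                draw += p
            else:
                away_win += p
    return home_win, draw, away_win


# Hypothetical expected goals, e.g. estimated from each side's attack and defence record
home, draw, away = match_outcome_probs(home_rate=1.6, away_rate=1.1)
print(f"home {home:.3f}  draw {draw:.3f}  away {away:.3f}")
```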

Machine Learning Algorithms:

Advanced machine learning algorithms, such as gradient boosting and neural networks, can be employed to enhance predictive accuracy. These algorithms can learn from complex patterns in the data and improve predictions over time.

Simulation and Monte Carlo Methods:

Simulation techniques and Monte Carlo methods can be used to model the randomness and uncertainty inherent in football games. By simulating many possible outcomes based on historical data and statistical models, predictions can be made with an understanding of the variability in results.
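The Poisson goals model can also be simulated instead of summed analytically. Simulation becomes useful once you want distributions over a whole season or tournament. Below is a minimal Monte Carlo sketch. It draws Poisson variates with Knuth's multiplication method to avoid a NumPy dependency; that method suits the small rates typical of football goals. The rates, simulation count, and seed are all arbitrary illustrative choices.

```python
import math
import random


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) variate with Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, product = 0, rng.random()
    while product > threshold:
        k += 1
        product *= rng.random()
    return k


def simulate_match(home_rate, away_rate, n_sims=100_000, seed=42):
    """Estimate home win / draw / away win probabilities by simulating many matches."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    counts = {"home": 0, "draw": 0, "away": 0}
    for _ in range(n_sims):
        h = sample_poisson(home_rate, rng)
        a = sample_poisson(away_rate, rng)
        if h > a:
            counts["home"] += 1
        elif h == a:
            counts["draw"] += 1
        else:
            counts["away"] += 1
    return {outcome: c / n_sims for outcome, c in counts.items()}


print(simulate_match(home_rate=1.6, away_rate=1.1))
```

The spread of the simulated results, not just the most likely outcome, is what conveys the variability mentioned above.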

Model Evaluation and Validation:

Evaluating the performance of predictive models is crucial. Metrics such as accuracy, precision, recall, and F1 score can assess the model’s effectiveness. Cross-validation techniques ensure that the model generalizes well to new, unseen data and avoids overfitting.
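The metrics named above can be computed directly from actual and predicted results. The minimal sketch below treats "win" as the positive class. The eight match results are invented for illustration.

```python
def classification_metrics(y_true, y_pred, positive="win"):
    """Accuracy, precision, recall and F1 for one class treated as 'positive'."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical actual vs predicted results for eight matches
actual = ["win", "loss", "win", "draw", "win", "loss", "win", "draw"]
predicted = ["win", "win", "win", "draw", "loss", "loss", "win", "win"]
print(classification_metrics(actual, predicted))
```

These numbers only mean something when computed on matches the model did not see during training. Because football data is chronological, time-ordered splits (train on earlier seasons, test on later ones) are usually safer than random folds.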

Consideration of Uncertainty:

Football games are influenced by numerous unpredictable factors, such as injuries, referee decisions, and player form. While statistical models can account for many variables, they cannot fully capture the uncertainty and randomness of the game.

Continuous Improvement:

Predictive models can be continuously improved by incorporating new data, refining features, and adjusting algorithms. Regular updates and iterative improvements help maintain model relevance and accuracy.

In summary, statistics and data science methods can enhance the ability to predict football game outcomes by leveraging historical data, creating relevant features, applying predictive modeling techniques, and continuously refining models. While these methods improve the accuracy of predictions, they cannot eliminate the inherent unpredictability of sports. Combining statistical insights with domain knowledge and expert analysis provides the best approach for making informed predictions.

Business Potential with Data Management and Analytics Services

In today's data-driven world, businesses are increasingly relying on data to make informed decisions and drive strategic growth. As organizations collect and generate vast amounts of data, the need for effective data management and analytics services becomes paramount. This is where data analytics consulting services play a crucial role. By harnessing the power of data, businesses can gain valuable insights, enhance operational efficiency, and achieve a competitive edge.

The Importance of Data Management

Data management is the foundation upon which successful data analytics is built. It involves the process of collecting, storing, and organizing data in a manner that ensures its accuracy, accessibility, and security. Effective data management allows organizations to maintain high-quality data that is essential for accurate analysis and decision-making.

Data Collection and Integration

The first step in data management is collecting data from various sources. This can include transactional data, customer interactions, social media, and more. Integrating these diverse data sources into a unified system is crucial for creating a comprehensive view of the business landscape. Data analytics consulting services assist organizations in designing and implementing robust data integration strategies, ensuring that all relevant data is captured and consolidated efficiently.

Data Storage and Organization

Once data is collected, it needs to be stored and organized in a structured manner. This involves setting up databases and data warehouses that can handle large volumes of data while maintaining data integrity. Data management services ensure that data is stored securely and is easily retrievable when needed. Effective data organization also involves categorizing data into meaningful segments, making it easier to analyze and derive insights.

Data Quality and Governance

Maintaining high data quality is essential for accurate analysis. Data management services focus on implementing data quality frameworks that ensure data is accurate, complete, and consistent. Data governance practices are also put in place to manage data policies, standards, and compliance. This helps organizations avoid data-related issues and ensures that data analytics efforts are based on reliable information.
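Data quality frameworks often start as small automated rules run against each batch of data. The sketch below checks three things: completeness (share of non-missing values per field), uniqueness (duplicate primary keys), and validity (values outside an agreed reference list, a typical governance rule). The records and the allowed-country list are hypothetical.

```python
# Hypothetical customer records; None marks a missing value
records = [
    {"customer_id": "C1", "email": "a@example.com", "country": "DE"},
    {"customer_id": "C2", "email": None, "country": "FR"},
    {"customer_id": "C1", "email": "a@example.com", "country": "DE"},
    {"customer_id": "C3", "email": "c@example.com", "country": "Germany"},
]

# Assumed governance rule: country must be a code from this reference list
ALLOWED_COUNTRIES = {"DE", "FR", "IT"}


def quality_report(rows, key="customer_id"):
    """Completeness per field, duplicate keys, and rows failing a validity rule."""
    if not rows:
        raise ValueError("no rows to check")
    fields = sorted({field for row in rows for field in row})
    completeness = {
        field: sum(row.get(field) is not None for row in rows) / len(rows)
        for field in fields
    }
    keys = [row.get(key) for row in rows]
    duplicate_keys = len(keys) - len(set(keys))
    invalid_country = sum(row.get("country") not in ALLOWED_COUNTRIES for row in rows)
    return {
        "completeness": completeness,
        "duplicate_keys": duplicate_keys,
        "invalid_country_rows": invalid_country,
    }


print(quality_report(records))
```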

Leveraging Data Analytics

Once data is properly managed, the next step is to leverage it through advanced analytics. Data analytics services help businesses transform raw data into actionable insights that can drive decision-making and strategic planning.

Descriptive Analytics

Descriptive analytics involves analyzing historical data to understand past performance and identify trends. This type of analysis provides a clear picture of what has happened in the past and helps organizations gain insights into their operations. Data analytics consulting services use various statistical and analytical techniques to uncover patterns and trends in historical data, enabling businesses to make data-driven decisions.

Predictive Analytics

Predictive analytics goes a step further by using historical data to forecast future outcomes. By applying statistical models and machine learning algorithms, businesses can predict trends, customer behavior, and potential risks. This proactive approach allows organizations to anticipate challenges and opportunities, making it possible to take preemptive actions. Data analytics consulting services provide expertise in building and deploying predictive models that can enhance business forecasting and planning.

Prescriptive Analytics

Prescriptive analytics offers recommendations for actions based on data insights. It involves using optimization techniques and simulation models to suggest the best course of action for achieving desired outcomes. This type of analytics helps businesses make informed decisions by providing actionable recommendations that align with their strategic goals. Data analytics consulting services assist organizations in developing prescriptive models that guide decision-making and improve overall performance.

The Role of Data Analytics Consulting Services

Data analytics consulting services are essential for helping businesses navigate the complexities of data management and analytics. These services provide expertise and support in various aspects of data analytics, including strategy development, implementation, and optimization.

Strategy Development

Data analytics consultants work closely with organizations to develop data strategies that align with their business objectives. This involves identifying key performance indicators (KPIs), setting data goals, and defining the scope of analytics initiatives. By creating a comprehensive data strategy, consultants ensure that data analytics efforts are focused and effective.

Implementation and Optimization

Once the strategy is in place, data analytics consultants assist with the implementation of data management systems and analytics tools. This includes setting up data infrastructure, integrating data sources, and deploying analytics solutions. Consultants also provide ongoing support to optimize data processes and ensure that analytics tools are used effectively.

Insights and Recommendations

One of the primary benefits of data analytics consulting services is the ability to generate actionable insights and recommendations. Consultants analyze data to uncover trends, identify opportunities, and provide recommendations for improving business performance. These insights help organizations make informed decisions and drive strategic growth.

Conclusion

In an era where data is a valuable asset, effective data management and analytics services are crucial for unlocking business potential. By leveraging data analytics consulting services, organizations can ensure that their data is well-managed, their analytics efforts are targeted, and their decision-making is driven by actionable insights. As businesses continue to navigate the complexities of the data landscape, investing in data management and analytics services will remain a key factor in achieving long-term success.


Decoding Time Series Analysis: Navigating Complex Numerical Challenges in Statistics Without the Fuss of Equations

Time series analysis is a robust and indispensable statistical tool for uncovering the patterns and trends hidden in data collected over time. In this post, we work through two graduate-level numerical questions and show how to dissect time series data while steering clear of daunting equations. Along the way, these real-world problems should help with statistics assignments in R. Let's sharpen our statistical skills and tackle these challenges head-on!

Question 1:

Consider a time series dataset representing the monthly sales of a product over the last three years. The sales data is as follows:

Year 1: Month 1 = 120, Month 2 = 150, Month 3 = 180, ..., Month 12 = 200 units

Year 2: Month 13 = 220, Month 14 = 250, Month 15 = 280, ..., Month 24 = 300 units

Year 3: Month 25 = 320, Month 26 = 350, Month 27 = 380, ..., Month 36 = 400 units

a) Calculate the moving average for a window size of 3 months for the entire time series.

b) Identify any seasonality patterns in the data and explain how they may impact sales forecasting.

c) Use a suitable decomposition method to break down the time series into its trend, seasonal, and residual components.

Answer:

a) Moving Average Calculation:

For Month 3, Moving Average = (120 + 150 + 180) / 3 = 150 units

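For Month 4, Moving Average = (150 + 180 + Month 4 sales) / 3. Month 4's figure is among the elided values (the 200 units listed above belongs to Month 12), so it must come from the full dataset.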

Continue this calculation for the entire time series.
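A trailing moving average can be computed in a single pass by keeping a running sum, as in the minimal sketch below (the function name is hypothetical). Only Months 1 to 3 are listed in full, so the example runs on those; the full series would need the elided months. The first average lines up with Month 3, as in the worked answer.

```python
def trailing_moving_average(values, window=3):
    """Return the average of each run of `window` consecutive values.

    The first result lines up with the `window`-th observation
    (Month 3 for a 3-month window).
    """
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    averages = [running / window]
    for i in range(window, len(values)):
        running += values[i] - values[i - window]  # slide the window one step
        averages.append(running / window)
    return averages


print(trailing_moving_average([120, 150, 180]))  # [150.0]
```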

b) Seasonality Patterns:

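Seasonality can be observed by comparing the average sales for each calendar month across the three years. Because these sales also rise steadily from year to year, remove the trend first (for example, subtract or divide by a centered 12-month moving average) so that growth is not mistaken for a seasonal effect.

For example, if detrended sales for January are consistently lower than for other months, that indicates a seasonal pattern, and a forecast that ignores it would overestimate January sales.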

c) Decomposition:

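Use a method such as additive decomposition (when seasonal swings stay roughly constant in size) or multiplicative decomposition (when they grow with the level of sales) to separate the time series into trend, seasonal, and residual components.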

The trend component represents the overall direction of sales.

The seasonal component captures recurring patterns.

The residual component accounts for random fluctuations.
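The sketch below implements classical additive decomposition in plain Python (the function name is hypothetical). The trend is a centered moving average over one full period (a 2 x 12 average for monthly data), the seasonal component is the average detrended value for each position in the cycle, and the residual is what remains. Because the Question 1 data is elided, the usage example runs on a hypothetical 36-month series built from a linear trend plus a fixed monthly pattern, which the function should recover. In R, `decompose()` performs the same classical method, and statsmodels offers `seasonal_decompose` in Python. For a multiplicative version, divide by the trend instead of subtracting.

```python
from statistics import mean


def classical_additive_decompose(series, period=12):
    """Split a series into trend + seasonal + residual (classical additive method).

    The first and last period // 2 points have no trend (or residual) value.
    """
    n = len(series)
    if n < 2 * period:
        raise ValueError("need at least two full seasonal cycles")
    half = period // 2
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half:t + half + 1]
        if period % 2 == 0:
            # Even period: period + 1 points, half weight on the two end points.
            trend[t] = (0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]) / period
        else:
            trend[t] = mean(window)

    # Average the detrended values for each position in the cycle.
    buckets = [[] for _ in range(period)]
    for t, (y, tr) in enumerate(zip(series, trend)):
        if tr is not None:
            buckets[t % period].append(y - tr)
    raw = [mean(b) for b in buckets]
    offset = mean(raw)
    seasonal_effects = [r - offset for r in raw]  # effects now sum to zero

    seasonal = [seasonal_effects[t % period] for t in range(n)]
    residual = [None if tr is None else y - tr - s
                for y, tr, s in zip(series, trend, seasonal)]
    return trend, seasonal, residual


# Hypothetical series: linear trend plus a fixed monthly pattern that sums to zero.
pattern = [5, -3, 2, 0, -4, 1, 3, -2, 0, 4, -5, -1]
series = [100 + 2 * t + pattern[t % 12] for t in range(36)]
trend, seasonal, residual = classical_additive_decompose(series)
print(round(trend[6], 6), [round(s, 6) for s in seasonal[:3]])  # 112.0 [5.0, -3.0, 2.0]
```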

Question 2:

You are provided with a monthly time series dataset representing the stock prices of a company over the last five years. The stock prices are as follows:

Year 1: $50, $55, $60, $52, $48, ..., $58

Year 2: $60, $65, $70, $62, $58, ..., $68

Year 3: $70, $75, $80, $72, $68, ..., $78

Year 4: $80, $85, $90, $82, $78, ..., $88

Year 5: $90, $95, $100, $92, $88, ..., $98

a) Calculate the percentage change in stock prices from one year to the next.

b) Apply a suitable smoothing technique (e.g., exponential smoothing) to forecast the stock prices for the next three months.

c) Assess the stationarity of the time series and suggest any transformations needed for better forecasting.

Answer:

a) Percentage Change Calculation:

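For Year 2, Percentage Change = [(Stock Price in Year 2 - Stock Price in Year 1) / Stock Price in Year 1] * 100, where each year's price is a single representative value, such as the year-end (Month 12) price or the annual average.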

Repeat this calculation for the subsequent years.
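A minimal sketch of the calculation, assuming the last listed (Month 12) price stands in for each year. Annual averages would need the elided months. Note how the same $10 yearly increase becomes a smaller percentage as the base price grows.

```python
def percentage_changes(values):
    """Period-over-period percentage change: (current - previous) / previous * 100."""
    return [(curr - prev) / prev * 100 for prev, curr in zip(values, values[1:])]


# Last listed (Month 12) price of each year in Question 2.
year_end_prices = [58, 68, 78, 88, 98]
print([round(p, 2) for p in percentage_changes(year_end_prices)])
# [17.24, 14.71, 12.82, 11.36]
```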

b) Exponential Smoothing:

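Apply simple exponential smoothing, where each new level is level_t = α * y_t + (1 - α) * level_(t-1) with a smoothing constant 0 < α ≤ 1, and use the final level as the forecast for each of the next three months. Because simple smoothing produces a flat forecast and these prices trend upward, Holt's linear (double) exponential smoothing, which also smooths a trend term, is usually the better choice here.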
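Both methods fit in a few lines of plain Python. In this sketch, the smoothing constants (0.5) and the trend initialisation (first difference) are arbitrary assumptions; in practice they are tuned by minimising one-step-ahead forecast errors. The example uses only the first five listed Year 1 prices, because the rest of the year is elided.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the final smoothed level; SES forecasts this same value for every horizon."""
    level = series[0]  # initialise with the first observation
    for y in series[1:]:
        level = alpha * y + (1 - alpha) * level
    return level


def holt_linear(series, alpha, beta, horizon):
    """Holt's linear-trend method: smooth a level and a slope, then extrapolate."""
    level, trend = series[0], series[1] - series[0]  # simple initialisation
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


# First five listed prices of Year 1.
prices = [50, 55, 60, 52, 48]
ses_level = simple_exponential_smoothing(prices, alpha=0.5)
print([ses_level] * 3)  # [51.0625, 51.0625, 51.0625]
print(holt_linear(prices, alpha=0.5, beta=0.5, horizon=3))
```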

c) Stationarity Assessment:

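Use statistical tests, such as the Augmented Dickey-Fuller (ADF) or KPSS test, or visual inspection of the series and its autocorrelation function to assess stationarity. Here, the steady upward trend and the repeating within-year pattern already suggest the series is non-stationary.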

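If non-stationarity is detected, consider transformations such as first differencing (to remove the trend), seasonal differencing at lag 12 (to remove the yearly pattern), or taking logarithms before differencing, which turns prices into log returns.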
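The transformations themselves are one-liners, as this minimal sketch shows (function names hypothetical). For the formal tests, statsmodels provides `adfuller` in `statsmodels.tsa.stattools`, and R's `tseries` package provides `adf.test`.

```python
import math


def difference(series, lag=1):
    """Lag-`lag` difference y[t] - y[t - lag]; lag=12 is a seasonal difference for monthly data."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def log_returns(prices):
    """First difference of log prices, a common stationarising transform for price series."""
    return difference([math.log(p) for p in prices])


prices = [50, 55, 60, 52, 48]
print(difference(prices))  # [5, 5, -8, -4]
```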

Conclusion:

As we conclude our exploration of these graduate-level time series analysis questions, we've unraveled the complexities of analyzing sales and stock price data. From moving averages to decomposition and from percentage change to exponential smoothing, these exercises showcase the versatility and power of time series analysis in extracting meaningful insights from temporal datasets. Armed with these skills, statisticians and data analysts can make informed predictions and contribute to sound decision-making in various fields. So, next time you encounter a time series conundrum, approach it with confidence and the analytical prowess gained from mastering these challenging questions.

#R Assignment Help#statistics assignment help#online assignment help#Education#university#academic success


Building Ethical AI: Challenges and Solutions

Artificial Intelligence (AI) is transforming industries worldwide, creating opportunities for innovation, efficiency, and growth. According to one market forecast, the global AI market is expected to grow from $59.67 billion in 2021 to $422.37 billion by 2028, at a CAGR of 39.4% during the forecast period. Despite this potential, developing AI technologies comes with significant ethical challenges. Ensuring that AI systems are designed and implemented ethically is crucial to maximizing their benefits while minimizing risks. This article explores the challenges in building ethical AI and offers solutions to address these issues effectively.
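Market figures like these are easy to sanity-check with the compound annual growth rate formula, (end / start)^(1 / years) - 1. A quick sketch, assuming the seven years from 2021 to 2028 as the period: the two quoted endpoints imply roughly 32% a year rather than 39.4%, so the figures are worth checking against the original forecast's base year and period.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


implied = cagr(59.67, 422.37, years=2028 - 2021)
print(f"{implied:.1%}")  # 32.3%
```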

Understanding Ethical AI

Ethical AI refers to the development and deployment of AI systems in a manner that aligns with widely accepted moral principles and societal values. It encompasses several aspects, including fairness, transparency, accountability, privacy, and security. Ethical AI aims to prevent harm and ensure that AI technologies are used to benefit society as a whole.

The Importance of Ethical AI

Trust and Adoption: Ethical AI builds trust among users and stakeholders, encouraging widespread adoption.

Legal Compliance: Adhering to ethical guidelines helps companies comply with regulations and avoid legal repercussions.

Social Responsibility: Developing ethical AI reflects a commitment to social responsibility and the well-being of society.

Challenges in Building Ethical AI

1. Bias and Fairness

AI systems can inadvertently perpetuate or even amplify existing biases present in the training data. This can lead to unfair treatment of individuals based on race, gender, age, or other attributes.

Solutions:

Diverse Data Sets: Use diverse and representative data sets to train AI models.

Bias Detection Tools: Implement tools and techniques to detect and mitigate biases in AI systems.

Regular Audits: Conduct regular audits to ensure AI systems remain fair and unbiased.
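One simple check that a bias-detection tool or audit might run is to compare positive-prediction rates across groups (demographic parity). The minimal sketch below, with hypothetical function names and data, computes the gap and the ratio between groups. A ratio below 0.8 is often flagged under the "four-fifths" rule of thumb from US employment-selection guidance. This is only one of many fairness metrics, and which one applies depends on the use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g., 'approve') within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += int(bool(pred))
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def demographic_parity_difference(predictions, groups):
    """Largest minus smallest selection rate across groups (0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Smallest divided by largest selection rate (1 means equal rates)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical model outputs for applicants in two groups, "a" and "b".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))                       # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, groups))         # 0.5
print(round(disparate_impact_ratio(preds, groups), 3))      # 0.333
```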

2. Transparency and Explainability

AI systems, especially those based on deep learning, can be complex and opaque, making it difficult to understand their decision-making processes.

Solutions:

Explainable AI (XAI): Develop and use explainable AI models that provide clear and understandable insights into how decisions are made.

Documentation: Maintain thorough documentation of AI models, including data sources, algorithms, and decision-making criteria.

User Education: Educate users and stakeholders about how AI systems work and the rationale behind their decisions.
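As one example of a model-agnostic explanation technique, permutation feature importance measures how much a model's accuracy drops when a single feature's values are shuffled. This is a minimal sketch with a hypothetical model and data. Real tools average over several shuffles and use a held-out dataset.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, seed=0):
    """Accuracy drop when each feature column is shuffled (larger drop = more important)."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled_rows = [row[:j] + (value,) + row[j + 1:] for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled_rows, labels))
    return importances


def model(row):
    """Hypothetical model that only looks at feature 0; feature 1 is ignored."""
    return int(row[0] > 0.5)


rows = [(0.1, 5), (0.9, 3), (0.4, 8), (0.7, 1), (0.2, 6), (0.8, 2)]
labels = [model(row) for row in rows]
print(permutation_importance(model, rows, labels))
```

Because the model never reads feature 1, shuffling it leaves accuracy unchanged and its importance is exactly zero, which is the kind of evidence an explanation report can show to users.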

3. Accountability

Determining accountability for AI-driven decisions can be challenging, particularly when multiple entities are involved in developing and deploying AI systems.

Solutions:

Clear Governance: Establish clear governance structures that define roles and responsibilities for AI development and deployment.

Ethical Guidelines: Develop and enforce ethical guidelines and standards for AI development.

Third-Party Audits: Engage third-party auditors to review and assess the ethical compliance of AI systems.

4. Privacy and Security

AI systems often rely on vast amounts of data, raising concerns about privacy and data security.

Solutions:

Data Anonymization: Use data anonymization techniques to protect individual privacy.

Robust Security Measures: Implement robust security measures to safeguard data and AI systems from breaches and attacks.

Consent Management: Ensure that data collection and use comply with consent requirements and privacy regulations.
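One way to check whether anonymization has actually worked is k-anonymity: every combination of quasi-identifiers (attributes such as age and ZIP code that could be linked to outside data) should be shared by at least k records. The sketch below, with hypothetical function names and records, measures k and shows how generalizing exact ages into ranges raises it. k-anonymity alone does not prevent disclosure when everyone in a group shares the same sensitive value; extensions such as l-diversity address that.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(tuple(rec[q] for q in quasi_identifiers) for rec in records)
    return min(groups.values()) if groups else 0


def generalize_age(age, width=10):
    """Replace an exact age with a range such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


# Hypothetical patient records; 'age' and 'zip' are quasi-identifiers.
records = [
    {"age": 34, "zip": "12345", "diagnosis": "flu"},
    {"age": 36, "zip": "12345", "diagnosis": "cold"},
    {"age": 52, "zip": "12399", "diagnosis": "flu"},
    {"age": 57, "zip": "12399", "diagnosis": "asthma"},
]
print(k_anonymity(records, ["age", "zip"]))  # 1: every person is unique
generalized = [{**r, "age": generalize_age(r["age"])} for r in records]
print(k_anonymity(generalized, ["age", "zip"]))  # 2
```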

5. Ethical Design and Implementation

The design and implementation of AI systems should align with ethical principles from the outset, rather than being an afterthought.

Solutions:

Ethical by Design: Incorporate ethical considerations into the design and development process from the beginning.

Interdisciplinary Teams: Form interdisciplinary teams that include ethicists, sociologists, and other experts to guide ethical AI development.

Continuous Monitoring: Continuously monitor AI systems to ensure they adhere to ethical guidelines throughout their lifecycle.

AI Development Companies and Ethical AI

AI development companies play a crucial role in promoting ethical AI. By adopting ethical practices, these companies can lead the way in creating AI technologies that benefit society. Here are some key steps that AI development companies can take to build ethical AI:

Promoting Ethical Culture

Leadership Commitment: Ensure that leadership is committed to ethical AI and sets a positive example for the entire organization.

Employee Training: Provide training on ethical AI practices and the importance of ethical considerations in AI development.

Engaging with Stakeholders

Stakeholder Involvement: Involve stakeholders, including users, in the AI development process to gather diverse perspectives and address ethical concerns.

Feedback Mechanisms: Establish mechanisms for stakeholders to provide feedback and report ethical concerns.

Adopting Ethical Standards

Industry Standards: Adopt and adhere to industry standards and best practices for ethical AI development.

Collaborative Efforts: Collaborate with other organizations, research institutions, and regulatory bodies to advance ethical AI standards and practices.

Conclusion

Building ethical AI is essential for ensuring that AI technologies are used responsibly and for the benefit of society. The challenges in creating ethical AI are significant, but they can be addressed through concerted efforts and collaboration. By focusing on bias and fairness, transparency and explainability, accountability, privacy and security, and ethical design, AI development companies can lead the way in developing AI systems that are trustworthy, fair, and beneficial. As AI continues to evolve, ongoing commitment to ethical principles will be crucial in navigating the complex landscape of AI development and deployment.


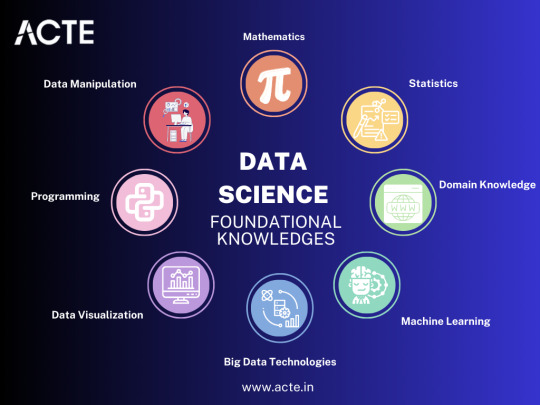

Exploring the Depths of Data Science: My Journey into Advanced Topics

In my journey through the ever-evolving landscape of data science, I've come to realize that the possibilities are as vast as the data itself. As I venture deeper into this realm, I find myself irresistibly drawn to the uncharted territories of advanced data science topics. The data universe is a treasure trove of intricate patterns, concealed insights, and complex challenges just waiting to be unraveled. This exploration isn't merely about expanding my knowledge; it's about discovering the profound impact that data can have on our world.

A. Setting the Stage for Advanced Data Science Exploration

Data science has transcended its initial boundaries of basic analyses and simple visualizations. It has evolved into a field that delves into the intricacies of machine learning, deep learning, big data, and more. Advanced data science is where we unlock the true potential of data, making predictions, uncovering hidden trends, and driving innovation.

B. The Evolving Landscape of Data Science

The field of data science is in a perpetual state of flux, with new techniques, tools, and methodologies emerging constantly. The boundaries of what we can achieve with data are continually expanding, offering exciting opportunities to explore data-driven solutions for increasingly complex problems.

C. My Motivation for Diving into Advanced Topics

Fueled by an insatiable curiosity and a desire to make a meaningful impact, I've embarked on a journey to explore advanced data science topics. The prospect of unearthing insights that could reshape industries, enhance decision-making, and contribute to societal progress propels me forward on this thrilling path.

II. Going Beyond the Basics: A Recap of Foundational Knowledge

Before diving headfirst into advanced topics, it's paramount to revisit the fundamentals that serve as the bedrock of data science. This refresher not only reinforces our understanding but also equips us to confront the more intricate challenges that lie ahead.

A. Revisiting the Core Concepts of Data Science

From the nitty-gritty of data collection and cleaning to the art of exploratory analysis and visualization, the core concepts of data science remain indomitable. These foundational skills provide us with a sturdy platform upon which we construct our advanced data science journey.

B. The Importance of a Strong Foundation for Advanced Exploration

Just as a towering skyscraper relies on a solid foundation to reach great heights, advanced data science hinges on a strong understanding of the basics. Without this firm grounding, the complexities of advanced techniques can quickly become overwhelming.

C. Reflecting on My Own Data Science Journey

When I look back on my personal data science journey, it's evident that each step I took paved the way for the next. As I progressed from being a novice to an intermediate practitioner, my hunger for knowledge and my drive to tackle more intricate challenges naturally led me toward the realm of advanced topics.

III. The Path to Mastery: Advanced Statistical Analysis

Advanced statistical analysis takes us far beyond the realm of simple descriptive statistics. It empowers us to draw nuanced insights from data and make informed decisions with a heightened level of confidence.

A. An Overview of Advanced Statistical Techniques

Advanced statistical techniques encompass the realm of multivariate analysis, time series forecasting, and more. These methods enable us to capture intricate relationships within data, providing us with a richer and more profound perspective.

B. Bayesian Statistics and Its Applications

Bayesian statistics offers a unique perspective on probability, allowing us to update our beliefs as new data becomes available. This powerful framework finds applications in diverse fields such as medical research, finance, and even machine learning.
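The simplest concrete case of "updating beliefs as new data arrives" is the Beta-Binomial model for a success rate: a Beta(α, β) prior plus s successes and f failures gives a Beta(α + s, β + f) posterior. A minimal sketch, with a uniform prior and hypothetical batches of trials:

```python
def update_beta(alpha, beta, successes, failures):
    """Conjugate update: Beta(alpha, beta) prior + binomial data -> Beta posterior."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Posterior mean of the success rate."""
    return alpha / (alpha + beta)


# Start from a uniform Beta(1, 1) prior, then observe data in batches.
a, b = 1, 1
for successes, failures in [(7, 3), (2, 8)]:  # hypothetical batches of trials
    a, b = update_beta(a, b, successes, failures)
    print(f"posterior Beta({a}, {b}), mean = {beta_mean(a, b):.3f}")
# posterior Beta(8, 4), mean = 0.667
# posterior Beta(10, 12), mean = 0.455
```

Each posterior becomes the prior for the next batch, which is exactly the sequential updating the paragraph describes.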

C. The Role of Hypothesis Testing in Advanced Data Analysis

Hypothesis testing takes on a more intricate form in advanced data analysis. It involves designing robust experiments, grasping the nuances of p-values, and addressing the challenges posed by multiple comparisons.
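Two standard corrections for multiple comparisons are Bonferroni (compare every p-value with α / m) and the Holm step-down procedure, which controls the same family-wise error rate but rejects at least as many hypotheses. A minimal sketch with hypothetical p-values:

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0 for each test whose p-value is at most alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def holm(p_values, alpha=0.05):
    """Holm step-down: test p-values from smallest to largest against alpha / (m - rank)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject


p = [0.01, 0.02, 0.03]
print(bonferroni(p))  # [True, False, False]
print(holm(p))        # [True, True, True]
```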

IV. Predictive Modeling: Beyond Regression

While regression remains an enduring cornerstone of predictive modeling, the world of advanced data science introduces us to a spectrum of modeling techniques that can elegantly capture the complex relationships concealed within data.

A. A Deeper Dive into Predictive Modeling

Predictive modeling transcends the simplicity of linear regression, offering us tools like decision trees, random forests, and gradient boosting. These techniques furnish us with the means to make more precise predictions for intricate data scenarios.

B. Advanced Regression Techniques and When to Use Them

In the realm of advanced regression, we encounter techniques such as Ridge, Lasso, and Elastic Net regression. These methods effectively address issues of multicollinearity and overfitting, ensuring that our models remain robust and reliable.
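The core difference between Ridge and Lasso shows up even with a single centered feature, where both have closed forms. Ridge divides by Sxx + λ and shrinks the slope smoothly. Lasso soft-thresholds Sxy, so a large enough penalty sets the slope exactly to zero, which is how it performs feature selection. This one-feature sketch is a simplification: with many correlated features, the same penalties are what stabilize the estimates. Elastic Net combines both penalties.

```python
def _centered_sums(x, y):
    """Return Sxy and Sxx computed on mean-centred data."""
    x_mean, y_mean = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    return sxy, sxx


def ridge_slope(x, y, lam):
    """Minimises sum((y - b*x)^2) + lam * b^2 on centred data: b = Sxy / (Sxx + lam)."""
    sxy, sxx = _centered_sums(x, y)
    return sxy / (sxx + lam)


def lasso_slope(x, y, lam):
    """Minimises sum((y - b*x)^2) + lam * |b| on centred data: soft-thresholding of Sxy."""
    sxy, sxx = _centered_sums(x, y)
    shrunk = max(abs(sxy) - lam / 2, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx


x, y = [1, 2, 3], [2, 4, 6]
print(ridge_slope(x, y, 0), ridge_slope(x, y, 2))    # 2.0 1.0  (lam=0 is ordinary least squares)
print(lasso_slope(x, y, 2), lasso_slope(x, y, 10))   # 1.5 0.0
```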

C. Embracing Ensemble Methods for Enhanced Predictive Accuracy

Ensemble methods, a category of techniques, ingeniously combine multiple models to achieve higher predictive accuracy. Approaches like bagging, boosting, and stacking harness the strengths of individual models, resulting in a formidable ensemble.

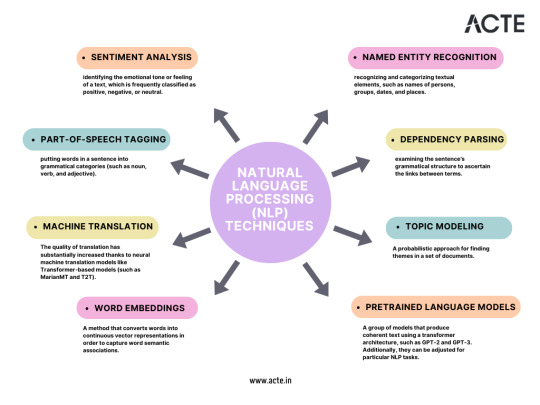

V. The Power of Unstructured Data: Natural Language Processing (NLP)

Unstructured text data, abundant on the internet, conceals a trove of valuable information. NLP equips us with the tools to extract meaning, sentiment, and insights from text.

A. Understanding the Complexities of Unstructured Text Data

Text data is inherently messy and nuanced, making its analysis a formidable challenge. NLP techniques, including tokenization, stemming, and lemmatization, empower us to process and decipher text data effectively.
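The difference between stemming and lemmatization is easiest to see on a tiny example. The sketch below uses a regex tokenizer and a deliberately crude suffix stripper (a hypothetical toy, not the Porter algorithm). It turns "running" into "runn" and leaves "were" untouched, whereas a dictionary-based lemmatizer, such as those in NLTK or spaCy, would use vocabulary and part of speech to return "run" and "be".

```python
import re


def tokenize(text):
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def crude_stem(token):
    """Toy suffix stripper: drop one common suffix if at least three characters remain."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


tokens = tokenize("The cats were running!")
print(tokens)                           # ['the', 'cats', 'were', 'running']
print([crude_stem(t) for t in tokens])  # ['the', 'cat', 'were', 'runn']
```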

B. Advanced NLP Techniques, Including Sentiment Analysis and Named Entity Recognition

Sentiment analysis gauges the emotions expressed in text, while named entity recognition identifies entities like names, dates, and locations. These advanced NLP techniques find applications in diverse fields such as marketing, social media analysis, and more.

C. Real-World Applications of NLP in Data Science

NLP's applications span from dissecting sentiment in customer reviews to generating human-like text with deep learning models. These applications not only drive decision-making but also enhance user experiences.

VI. Deep Learning and Neural Networks

At the heart of deep learning lies the neural network architecture, enabling us to tackle intricate tasks like image recognition, language translation, and even autonomous driving.

A. Exploring the Neural Network Architecture

Grasping the components of a neural network—layers, nodes, and weights—forms the foundation for comprehending the intricacies of deep learning models.
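To make layers, nodes, and weights concrete, here is a forward pass through a tiny network with two inputs, one hidden layer of two ReLU nodes, and one output node. The weights are hypothetical hand-picked values; in a real network they are learned by gradient descent.

```python
def relu(values):
    """Rectified linear activation applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each node takes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(node_weights, inputs)) + b
            for node_weights, b in zip(weights, biases)]


def forward(x, params):
    hidden = relu(dense(x, params["W1"], params["b1"]))  # hidden layer: 2 nodes
    return dense(hidden, params["W2"], params["b2"])     # output layer: 1 node


params = {
    "W1": [[1.0, -1.0], [0.5, 0.5]], "b1": [0.0, 0.0],
    "W2": [[1.0, 2.0]], "b2": [0.5],
}
print(forward([2.0, 1.0], params))  # [4.5]
```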

B. Advanced Deep Learning Concepts like CNNs and RNNs

Convolutional Neural Networks (CNNs) excel at image-related tasks, while Recurrent Neural Networks (RNNs) proficiently handle sequences like text and time series data. These advanced architectures amplify model performance, expanding the horizons of what data-driven technology can accomplish.

C. Leveraging Deep Learning for Complex Tasks like Image Recognition and Language Generation

Deep learning powers image recognition in self-driving cars, generates human-like text, and translates languages in real time. These applications redefine what's possible with data-driven technology, propelling us into an era of boundless potential.

VII. Big Data and Distributed Computing

As data scales to unprecedented sizes, the challenges of storage, processing, and analysis necessitate advanced solutions like distributed computing frameworks.

A. Navigating the Challenges of Big Data in Data Science

The era of big data demands a paradigm shift in how we handle, process, and analyze information. Traditional methods quickly become inadequate, making way for innovative solutions to emerge.

B. Introduction to Distributed Computing Frameworks like Apache Hadoop and Spark

Distributed computing frameworks such as Apache Hadoop and Spark empower us to process massive datasets across clusters of computers. These tools enable efficient handling of big data challenges that were previously insurmountable.
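Hadoop's original processing model, MapReduce, splits work into map, shuffle, and reduce phases. The single-machine simulation below (plain Python, not the Hadoop or Spark API) counts words to show the pattern. In a real cluster, each input split is mapped on a different node and the shuffle moves data across the network.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Mapper: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reducer: combine the values for each key."""
    return {key: sum(values) for key, values in grouped.items()}


splits = ["big data big insights", "data at scale"]  # each split would live on a different node
counts = reduce_phase(shuffle_phase(chain.from_iterable(map_phase(s) for s in splits)))
print(counts)  # {'big': 2, 'data': 2, 'insights': 1, 'at': 1, 'scale': 1}
```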

C. Practical Applications of Big Data Technologies

Big data technologies find applications in diverse fields such as healthcare, finance, and e-commerce. They enable us to extract valuable insights from data that was once deemed too vast and unwieldy for analysis.

VIII. Ethical Considerations in Advanced Data Science

As data science advances, ethical considerations become even more pivotal. We must navigate issues of bias, privacy, and transparency with heightened sensitivity and responsibility.

A. Addressing Ethical Challenges in Advanced Data Analysis

Advanced data analysis may inadvertently perpetuate biases or raise new ethical dilemmas. Acknowledging and confronting these challenges is the initial step toward conducting ethical data science.

B. Ensuring Fairness and Transparency in Complex Models

Complex models can be opaque, making it challenging to comprehend their decision-making processes. Ensuring fairness and transparency in these models is a pressing concern that underscores the ethical responsibilities of data scientists.

C. The Responsibility of Data Scientists in Handling Sensitive Data

Data scientists shoulder a profound responsibility when handling sensitive data. Employing advanced encryption techniques and data anonymization methods is imperative to safeguard individual privacy and uphold ethical standards.

IX. The Journey Continues: Lifelong Learning and Staying Updated

In the realm of advanced data science, learning is an unending odyssey. Staying abreast of the latest advancements is not just valuable; it's imperative to remain at the vanguard of the field.

A. Embracing the Mindset of Continuous Learning in Advanced Data Science

Continuous learning isn't a choice; it's a necessity. As data science continually evolves, so must our skills and knowledge. To stand still is to regress.

B. Resources and Communities for Staying Updated with the Latest Advancements

The ACTE Institute provides an array of resources, from books and data science courses to research papers and data science communities, offering a wealth of opportunities to stay informed about the latest trends and technologies.

C. Personal Anecdotes of Growth and Adaptation in the Field

My expedition into advanced data science has been replete with moments of growth, adaptation, and occasionally, setbacks. These experiences have profoundly influenced my approach to confronting complex data challenges and serve as a testament to the continuous nature of learning.

In conclusion, the journey into advanced data science is an exhilarating odyssey. It's a voyage that plunges us into the deepest recesses of data, where we unearth insights that possess the potential to transform industries and society at large. As we reflect on the indispensable role of essential data science tools, we comprehend that the equilibrium between tools and creativity propels us forward. The data universe is boundless, and with the right tools and an insatiable curiosity, we are poised to explore its ever-expanding horizons.

So, my fellow data enthusiasts, let us persist in our exploration of the data universe. There are discoveries yet to be unearthed, solutions yet to be uncovered, and a world yet to be reshaped through the power of data.


What is Data Analytics in simple words?

Descriptive analytics: – The most basic type of data analytics analyses historical data to identify patterns and relationships. An example of descriptive analytics is analysing sales data to understand trends in monthly revenue. By examining historical sales figures, a company can identify patterns, seasonality, and peak sales periods, helping them make informed decisions about inventory management, marketing strategies, and sales forecasting.
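A minimal sketch of that kind of descriptive summary: total sales by month, then the peak month. The transactions and the `monthly_revenue` helper are hypothetical.

```python
from collections import defaultdict


def monthly_revenue(transactions):
    """Sum sales amounts by 'YYYY-MM' month key."""
    totals = defaultdict(float)
    for date_str, amount in transactions:
        totals[date_str[:7]] += amount  # '2024-03-15' -> '2024-03'
    return dict(sorted(totals.items()))


# Hypothetical transactions (date, amount).
sales = [("2024-01-05", 120.0), ("2024-01-20", 80.0),
         ("2024-02-11", 150.0), ("2024-03-02", 300.0), ("2024-03-28", 50.0)]
totals = monthly_revenue(sales)
peak = max(totals, key=totals.get)
print(totals)  # {'2024-01': 200.0, '2024-02': 150.0, '2024-03': 350.0}
print(peak)    # 2024-03
```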

Diagnostic analytics: – Helps businesses understand why things happen by examining data to identify patterns, trends, and connections. For example, data analysts may identify anomalies in the data, collect data related to these anomalies, and implement statistical techniques to find relationships and trends that explain the anomalies.
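A common first step in spotting such anomalies is the z-score: flag values that lie more than a chosen number of standard deviations from the mean. A minimal sketch on hypothetical data with one spike (robust alternatives based on the median and MAD are less distorted by the outliers themselves):

```python
from statistics import mean, pstdev


def zscore_anomalies(values, threshold=2.0):
    """Return indices whose z-score |x - mean| / std exceeds `threshold`."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # constant data: nothing stands out
    return [i for i, x in enumerate(values) if abs(x - mu) / sigma > threshold]


daily_orders = [10, 11, 9, 10, 12, 10, 50, 11]  # hypothetical data with one spike
print(zscore_anomalies(daily_orders))  # [6]
```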

Predictive analytics: – Uses data analysis, machine learning, artificial intelligence, and statistical models to find patterns that might predict future behaviour.

Prescriptive analytics: – A statistical method that uses both descriptive and predictive analytics to find the ideal way forward or the action needed in a particular scenario. Prescriptive analytics focuses on actionable insights rather than data monitoring.

I hope this blog finds you well and proves to be a valuable resource in your quest for knowledge. If you want to become a data analyst, join our Data analytics course. May the information you seek guide you toward success and growth. Thank you for exploring, and may you find it truly beneficial.

Link source: https://www.dicslaxminagar.com/blog/what-is-data-analytics-in-simple-words/


Unleashing the Power of Data Analytics & Reporting: A Guide to Enhanced Decision-Making

In today's fast-paced business landscape, data reigns supreme. Every click, purchase, and interaction generates a wealth of valuable information waiting to be analysed and utilized. The ability to harness this data effectively through analytics and reporting can make all the difference between thriving and merely surviving in the competitive marketplace.

The Role of Data Analytics & Reporting

Data analytics involves examining raw data to draw conclusions and insights, while reporting involves presenting these findings in a structured format to facilitate decision-making. Together, they provide businesses with the necessary tools to understand customer behaviour, optimize processes, and drive strategic initiatives.

Importance of Data Analytics:

1. Informed Decision-Making: By analysing past trends and patterns, businesses can make data-driven decisions rather than relying on intuition or guesswork.

2. Competitive Advantage: Companies that leverage data analytics gain a competitive edge by identifying opportunities for growth, improving efficiency, and mitigating risks.

3. Enhanced Customer Experience: Understanding customer preferences and behavior enables personalized marketing efforts, leading to higher customer satisfaction and loyalty.

Significance of Reporting:

1. Communication of Insights: Reports serve as a means to communicate key findings, trends, and metrics to stakeholders, facilitating understanding and alignment across the organization.

2. Performance Tracking: Regular reporting allows businesses to monitor performance against goals and objectives, enabling timely adjustments and course corrections.

3. Compliance and Accountability: Reporting plays a crucial role in meeting regulatory requirements, ensuring transparency, and holding individuals and departments accountable for their actions.

Leveraging Outsourcingwise Consulting's Training for Data Analytics & Reporting

In the realm of data analytics and reporting, staying abreast of the latest tools, techniques, and best practices is essential for success. This is where Outsourcingwise Consulting's training programs come into play, offering comprehensive courses designed to equip professionals with the skills needed to excel in this field.

Cutting-Edge Curriculum:

Outsourcingwise Consulting's training curriculum covers a wide range of topics, including:

- Data Collection and Preparation: Understanding data sources, cleaning, and transforming raw data into usable formats.

- Statistical Analysis: Applying statistical methods to uncover patterns, correlations, and trends within the data.

- Data Visualization: Communicating insights effectively through charts, graphs, and dashboards.

- Reporting Tools: Utilizing industry-leading reporting tools such as Tableau, Power BI, and Google Data Studio (now Looker Studio) to create compelling reports.

- Advanced Analytics Techniques: Delving into predictive analytics, machine learning, and AI for more sophisticated analysis and forecasting.

Hands-On Learning:

One of the key features of Outsourcingwise Consulting's training programs is their emphasis on practical, hands-on learning. Participants work on real-world projects, applying their newly acquired skills to solve actual business problems. This experiential learning approach ensures that participants are not just theory-smart but also equipped with the practical know-how to tackle challenges in the workplace.

Expert Guidance:

The trainers at Outsourcingwise Consulting are seasoned professionals with extensive experience in the field of data analytics and reporting. They bring their wealth of knowledge and expertise to the training sessions, providing valuable insights, tips, and guidance to participants. Whether you're a beginner looking to build a solid foundation or an experienced professional seeking to deepen your skills, the trainers at Outsourcingwise Consulting are committed to helping you achieve your goals.

Career Advancement Opportunities:

In today's job market, proficiency in data analytics and reporting is in high demand across industries. By completing Outsourcingwise Consulting's training programs, participants not only enhance their skill set but also position themselves for career advancement opportunities. Whether it's securing a promotion within their current organization or transitioning to a new role in a different industry, the skills acquired through Outsourcingwise Consulting's training can open doors to exciting career prospects.

Conclusion

In conclusion, data analytics and reporting play a pivotal role in driving informed decision-making, enhancing organizational performance, and gaining a competitive edge in the marketplace. By leveraging Outsourcingwise Consulting's training programs, professionals can acquire the skills and knowledge needed to excel in this dynamic and rapidly evolving field. Whether you're a business leader seeking to unlock the full potential of your data or an aspiring data analyst looking to kick-start your career, Outsourcingwise Consulting offers the expertise and resources to help you succeed. Embrace the power of data analytics and reporting today, and chart a course towards a brighter, more data-driven future.

#DataAnalyticsTraining#AnalyticsEducation#DataTraining#DataScienceEducation#AnalyticsWorkshops#DataLearning#DataSkills#AnalyticsCertification#DataProfessionals#DataDrivenDecisions#AnalyticsConsulting#DataInsights#DataStrategy#BigDataTraining#DataAnalyticsBootcamp#DataVisualization#MachineLearningTraining#BusinessAnalytics#DataProficiency#AnalyticsTutorials


Predictive vs Prescriptive vs Descriptive Analytics Explained

Business analytics uses data patterns to guide strategic moves through three key approaches: descriptive analytics identifies "what has occurred", predictive analytics forecasts "what could occur", and prescriptive analytics recommends "what should occur" to optimize decisions. We decode the science behind each for aspiring analytics professionals.

Descriptive analytics converts large volumes of historical data into concise summaries of metrics that reveal business health, customer trends, operational efficiency and more, using direct analysis, aggregation and data-mining techniques to produce up-to-date reports.

Predictive analytics estimates the probabilities of unknown future outcomes by applying statistical, econometric and machine learning models to existing data, reducing uncertainty and capturing emerging behaviors early enough for mitigating action. Risk models simulate scenarios to weigh upside and downside trade-offs.

Prescriptive analytics takes guidance one step further: after predicting multiple possible futures through simulation (for example, drawing many scenarios from a probability distribution such as the bell-shaped normal curve), it recommends the best decision options against key performance indicators tied to business objectives. Optimization algorithms then deliver the preferred actions.

Foundational data comprehension and wrangling skills underpin all three approaches, and specializing further in statistical, computational or operational analytics boosts career readiness, since global employers prioritize different strengths!

Posted By:

Aditi Borade, 4th-year B.Arch,

L.S. Raheja School of Architecture

Disclaimer: The perspectives shared in this blog are not intended to be prescriptive. They should act merely as viewpoints to aid overseas aspirants with helpful guidance. Readers are encouraged to conduct their own research before availing the services of a consultant.

Unveiling Market Insights: Exploring the Sampling Distribution, Standard Deviation, and Standard Error of NIFTY50 Volumes in Stock Analysis

Introduction:

In the dynamic realm of stock analysis, examining the sampling distribution, standard deviation and standard error of NIFTY50 trading volumes gives investors practical tools rather than abstract statistics. Standard deviation guides risk evaluation when markets are volatile. The sampling distribution, much like a navigational aid, shows how much estimates can vary and so helps judge forecasting accuracy. Standard error quantifies how reliable a sample-based estimate is. These insights are not only stock-specific; they also inform portfolio construction and enable quick adjustments to market developments. In a financial world where choices determine success, a data-driven strategy built on these statistical measures helps investors operate with confidence and resilience.

NIFTY-50 is a tracker of the Indian economy. The index is periodically reviewed and rebalanced so that it accurately reflects the shifting economic landscape in India. As a widely followed index, it plays an important role in investment strategies and market analysis.

Methodology

The data was collected from Kaggle and has 2,400+ rows and 8 columns: date, open, close, high, low, volume, stock split and dividend. After retrieving the data, we used Python to remove null values and unnecessary columns from the dataset. We also removed all zero values and dropped the less correlated columns.
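The post does not show its Python cleaning code, so the following is only a hypothetical sketch of the steps described: drop rows with a missing or zero volume and keep a subset of columns. The column names, the placeholder rows and the choice to keep only `Date` and `Volume` are assumptions. The standard-library `csv` module is used so the example runs without extra packages.

```python
import csv
import io

# Made-up rows in a layout resembling the dataset described above;
# the real Kaggle file's column names and values may differ.
RAW_CSV = """Date,Open,High,Low,Close,Volume,Stock Splits,Dividends
2017-01-02,1.0,1.0,1.0,1.0,142000,0,0
2017-01-03,1.0,1.0,1.0,1.0,0,0,0
2017-01-04,1.0,1.0,1.0,1.0,,0,0
2017-01-05,1.0,1.0,1.0,1.0,188000,0,0
"""

KEEP_COLUMNS = ["Date", "Volume"]  # hypothetical choice of columns to retain


def clean_rows(raw_text, keep=KEEP_COLUMNS, value_column="Volume"):
    """Drop rows whose value is missing or zero, and keep only the selected columns."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(raw_text)):
        value = (row.get(value_column) or "").strip()
        if not value:            # null / empty value
            continue
        if float(value) == 0:    # zero value
            continue
        cleaned.append({column: row[column] for column in keep})
    return cleaned


rows = clean_rows(RAW_CSV)
print(rows)
```

With these placeholder rows, only the 2017-01-02 and 2017-01-05 rows survive.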

After completing these pre-processing steps, we imported the cleaned data into RStudio for further analysis.

Findings:

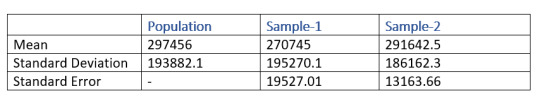

Our aim is to find how well samples represent the full volume data. To do this, we first drew two samples, of sizes 100 and 200. We then calculated the mean, standard deviation, sampling distribution and standard error separately for each sample. Finally, we compared the two samples and found that the mean and standard deviation of the second sample (size 200) are closer to those of the full volume data.

From the table above, sample 2 (size 200) has a mean of 291642.5, while sample 1 (size 100) has a mean of 270745. Since the population mean volume is 297456, sample 2 is the better representative of the volume in this comparison.

Similarly, the standard error of sample 2 is smaller than that of sample 1, which means the mean of sample 2 is expected to lie closer to the true mean volume.

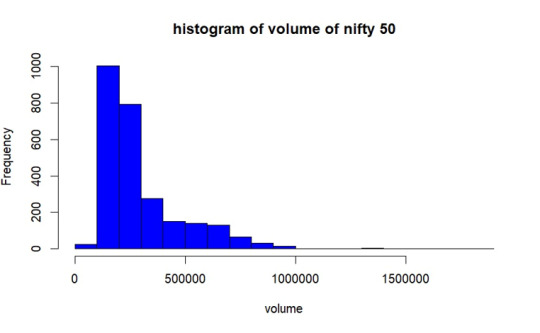

Population Distribution.

As the graph shows, on most trading days from 2017 to December 2023 the NIFTY50 trading volume was between 1 lakh and 2.8 lakh.

Sample Selection

We drew two samples, of sizes 100 and 200, without replacement. We then computed the mean, standard deviation and standard error of each sample.
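The original analysis was carried out in RStudio; as an illustration only, the same sampling step can be sketched in Python. The `population` below is a simulated log-normal stand-in for roughly 2,400 daily volumes, not the Kaggle data, so its output will not reproduce the figures in this post. `statistics.stdev` uses the n - 1 denominator, as R's `sd()` does, and the standard error is computed as s / √n.

```python
import math
import random
import statistics


def describe_sample(population, n, rng):
    """Draw n values without replacement and return (mean, SD, standard error)."""
    sample = rng.sample(population, n)  # sampling without replacement
    m = statistics.mean(sample)
    s = statistics.stdev(sample)        # sample standard deviation (n - 1 denominator)
    se = s / math.sqrt(n)               # standard error of the mean
    return m, s, se


rng = random.Random(0)
# Simulated, right-skewed stand-in for ~2,400 daily volumes; NOT the NIFTY50 data.
population = [rng.lognormvariate(12.4, 0.5) for _ in range(2400)]
for n in (100, 200):
    m, s, se = describe_sample(population, n, rng)
    print(f"n={n}: mean={m:.1f}, sd={s:.1f}, se={se:.2f}")
```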

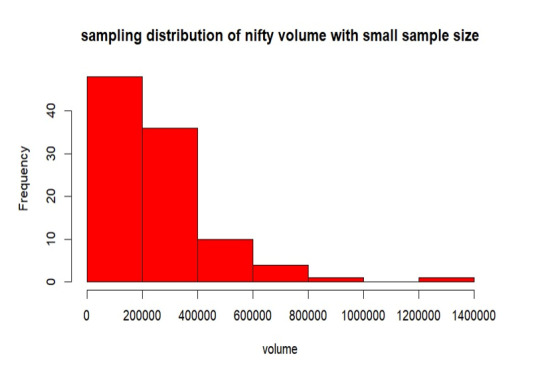

Sampling Distribution of Sample 1

As the graph above shows, most values in this sample lie between 0 and 2 lakh. The sample is also less spread out across the population's range. The mean is 270745, the standard deviation is 195270.5 and the standard error is 19527.01.

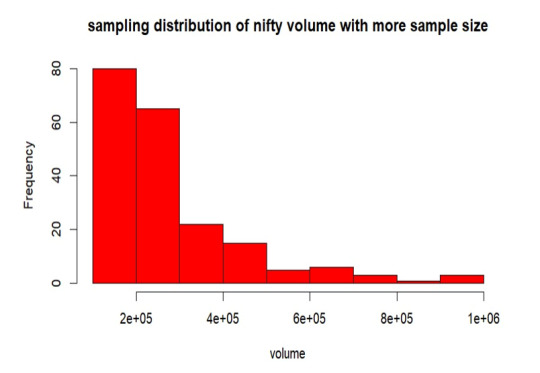

Sampling Distribution of Sample 2

As the graph above shows, most values in this sample also lie between 0 and 2 lakh, but the sample is spread more widely across the volume range than sample 1. The mean is 291642.5, the standard deviation is 186162.3 and the standard error is 13163.66.
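As a quick check, both standard errors follow the usual formula for the standard error of the mean, where s is the sample standard deviation and n the sample size. Substituting the values reported for the two samples gives:

```latex
\mathrm{SE} = \frac{s}{\sqrt{n}}, \qquad
\mathrm{SE}_1 = \frac{195270.5}{\sqrt{100}} = 19527.05, \qquad
\mathrm{SE}_2 = \frac{186162.3}{\sqrt{200}} \approx 13163.66
```

The first result differs from the reported 19527.01 only in the second decimal place. Because the two standard deviations are similar, most of the reduction in standard error comes from the larger sample: doubling n divides the standard error by about √2.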

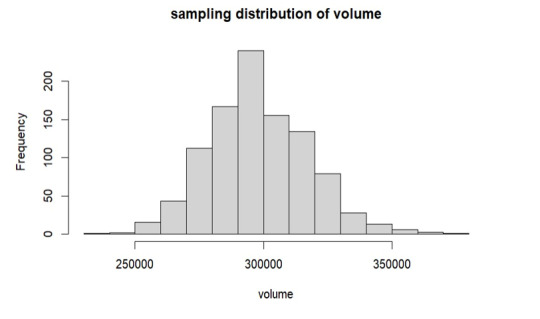

Replication of Sample 1

Here, we repeatedly draw samples from the volume data and record the mean of each one, approximating the distribution of sample means across possible sample sets. According to the central limit theorem, these sample means should follow an approximately normal distribution when the sample size is large enough.

As the graph above shows, the replicated sample means follow an approximately normal distribution centered around 3 lakh, which is close to the true mean volume of 297456 that we calculated earlier.
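The replication idea can be sketched as follows: repeatedly draw samples of a fixed size, record each sample's mean, and compare the spread of those means with σ/√n. The `replicate_means` helper is hypothetical, and the right-skewed population is simulated rather than taken from the NIFTY50 data; even so, the sample means cluster around the population mean as the central limit theorem predicts.

```python
import random
import statistics


def replicate_means(population, n, reps, seed=0):
    """Draw `reps` samples of size n (without replacement) and return each sample's mean."""
    rng = random.Random(seed)
    return [statistics.fmean(rng.sample(population, n)) for _ in range(reps)]


# Simulated, right-skewed stand-in for daily volumes; NOT the NIFTY50 data.
data_rng = random.Random(1)
population = [data_rng.lognormvariate(12.4, 0.5) for _ in range(2400)]

means = replicate_means(population, n=100, reps=2000)
print("population mean:     ", round(statistics.fmean(population)))
print("mean of sample means:", round(statistics.fmean(means)))
# CLT: the spread of the sample means should be close to sigma / sqrt(n)
print("sd of sample means:  ", round(statistics.stdev(means)))
print("sigma / sqrt(n):     ", round(statistics.pstdev(population) / 100 ** 0.5))
```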

Conclusion

In the observed trading volume range of 2 lakh to 3 lakh, increasing the sample size led to a smaller standard error. This trend is consistent with the sample mean converging to the true mean volume as the sample size increases. When the sampling is replicated, the resulting distribution of sample means is approximately normal and centered close to the population mean, which is consistent with the central limit theorem's description of the trading volume data.

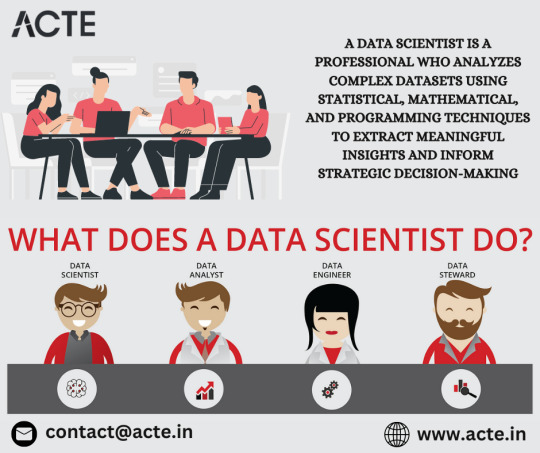

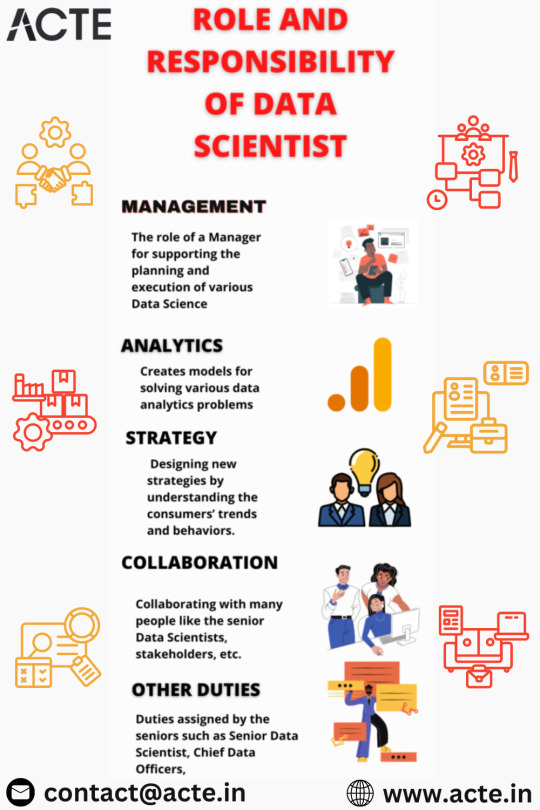

From Exploration to Enchantment: Unveiling the Artistry of Data Science

In the dynamic realm of technology, where data has become the lifeblood of decision-making, data scientists emerge as the modern-day wizards wielding statistical prowess, machine learning magic, and programming spells. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry. But what exactly does a data scientist do, and how do they weave their spells to extract meaningful insights from the vast tapestry of data? Let's embark on a journey to demystify the role and responsibilities of a data scientist.

1. Data Exploration and Cleaning: Navigating the Data Seas

The odyssey begins with a deep dive into the vast data seas. Data scientists embark on a voyage of exploration, understanding the intricacies of datasets, identifying patterns, and cleansing the data to ensure its accuracy and relevance. This phase lays the foundation for the subsequent stages of analysis.

2. Statistical Analysis: Illuminating Patterns in the Darkness

Armed with statistical techniques, data scientists illuminate the darkness within the data. They apply statistical analysis to unveil trends, patterns, and relationships, transforming raw data into actionable insights. This statistical alchemy provides the groundwork for making informed decisions.

3. Machine Learning: Casting Predictive Spells

The heart of the data scientist's craft lies in machine learning—the art of teaching machines to learn from data. They develop and implement machine learning models, predicting outcomes, classifying data, or uncovering hidden patterns. It's a realm where algorithms become the guiding stars.

4. Data Visualization: Painting a Picture for All

To communicate the magic discovered within the data, data scientists harness the power of data visualization. Through charts, graphs, and dashboards, they paint a vivid picture, making complex insights accessible and compelling for both technical and non-technical stakeholders.

5. Feature Engineering: Crafting the Building Blocks

Much like a skilled architect, a data scientist engages in feature engineering. They carefully select and transform relevant features within the data, enhancing the performance and predictive power of machine learning models.

6. Predictive Modeling: Foretelling the Future

The crystal ball of a data scientist is the predictive model. Building upon historical data, they create models to forecast future trends, behaviors, or outcomes, guiding organizations towards proactive decision-making.

7. Algorithm Development: Coding the Spells

In the enchanting world of data science, programming becomes the language of spellcasting. Data scientists design and implement algorithms, utilizing languages such as Python or R to extract valuable information from the data tapestry.

8. Collaboration: Bridging Realms

No sorcerer works in isolation. Data scientists collaborate with cross-functional teams, translating data-driven insights into tangible business objectives. This collaboration ensures that the magic woven from data aligns seamlessly with the broader goals of the organization.

9. Continuous Learning: Evolving with the Arcane

In a landscape where technology evolves constantly, data scientists embrace continuous learning. They stay abreast of the latest developments in data science, machine learning, and relevant technologies to refine their craft and adapt to the ever-changing tides of industry trends.

10. Ethical Considerations: Guardians of Data Integrity

As stewards of data, data scientists bear the responsibility of ethical considerations. They navigate the ethical landscape, ensuring the privacy and security of data while wielding their analytical powers for the greater good.

In conclusion, a data scientist's journey is one of constant exploration, analysis, and collaboration—a symphony of skills and knowledge harmonized to reveal the hidden melodies within data. In a world where data has become a strategic asset, data scientists are the custodians of its magic, turning raw information into insights that shape the destiny of organizations. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

Data Science in Marketing

Have you ever bought something online, only to have ads for similar items follow you around the internet? This is a common example of how data science is being used in marketing. Many of us engage with data-driven marketing applications on a regular basis, often without realizing that our decisions are influenced by them.

Data science in marketing involves the use of advanced analytics, machine learning algorithms, and statistical models to interpret complex datasets. This process provides marketers with actionable insights, enabling them to make informed decisions and develop strategies that resonate with their target audience. From customer segmentation to predictive modeling, data science techniques empower marketers to unlock valuable patterns and trends within their data.

One fundamental application of data science in marketing is customer segmentation. By leveraging clustering algorithms, marketers can categorize their audience into distinct groups based on shared characteristics, behaviors, and preferences. This allows for highly targeted and personalized marketing campaigns, as evidenced by a study conducted by McKinsey & Company [1]. The report emphasizes that companies implementing data-driven segmentation strategies witness a significant boost in customer engagement and satisfaction.
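Clustering-based segmentation can be sketched with a plain k-means implementation: alternate between assigning each customer to the nearest cluster centre and moving each centre to the mean of its members. The customer features and values below are made up. In practice features are usually standardized first (here annual spend dominates the distance), and a library implementation such as scikit-learn's `KMeans` would normally be used.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Plain Lloyd's k-means on numeric feature tuples; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen customers
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each customer joins the nearest centroid (Euclidean distance)
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of the customers assigned to it
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments will not change again
        centroids = new_centroids
    return centroids, labels


# Made-up customers: (annual spend, visits per month)
customers = [(200, 1), (250, 2), (220, 1), (1200, 8), (1100, 9), (1300, 7)]
centroids, labels = kmeans(customers, k=2)
print(labels)
```

The three low-spend customers end up in one segment and the three high-spend customers in the other.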

Moreover, predictive modeling is another area where data science proves its worth in marketing. Through predictive analytics, businesses can forecast future trends, customer behaviors, and market dynamics. This capability is exemplified in a case study by IBM [2], where a major retailer utilized predictive modeling to optimize its inventory management and anticipate customer demands. The result was not only a reduction in excess inventory but also a substantial increase in overall sales.

Data science plays an even more prominent role in digital marketing. Algorithms powering recommendation engines, personalized content delivery and dynamic pricing strategies are all manifestations of data-driven decision-making. Amazon, a pioneer in using data science in its marketing, provides a noteworthy example. The e-commerce giant applies machine learning algorithms to customer data, including browsing and purchasing patterns, to tailor product recommendations with remarkable accuracy [3]. This analyzed data feeds Amazon’s recommendation engine: every time a user searches for a specific product, the platform uses it to predict what else the user is likely to be interested in. This in turn helps Amazon persuade customers to purchase additional products.
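The post does not describe how Amazon's engine works internally, so the following is not Amazon's method. It is a minimal sketch of one simple recommendation approach, "frequently bought together" co-occurrence counting: count how often pairs of items appear in the same order, then recommend the most frequent companions of an item. The baskets and the helper names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(baskets):
    """Count how often each ordered pair of distinct items appears in the same basket."""
    co_counts = defaultdict(Counter)
    for basket in baskets:
        unique_items = list(dict.fromkeys(basket))  # de-duplicate, keep order
        for a, b in permutations(unique_items, 2):
            co_counts[a][b] += 1
    return co_counts


def recommend(co_counts, item, top_n=3):
    """Items most often bought together with `item`, most frequent first."""
    return [other for other, _ in co_counts.get(item, Counter()).most_common(top_n)]


# Made-up purchase histories, one list of items per customer order
baskets = [
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "stove"],
    ["lantern", "batteries"],
]
co_counts = build_cooccurrence(baskets)
print(recommend(co_counts, "tent"))
```

For "tent", "sleeping bag" is recommended first because it appears alongside a tent in two orders; unseen items simply get no recommendations.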